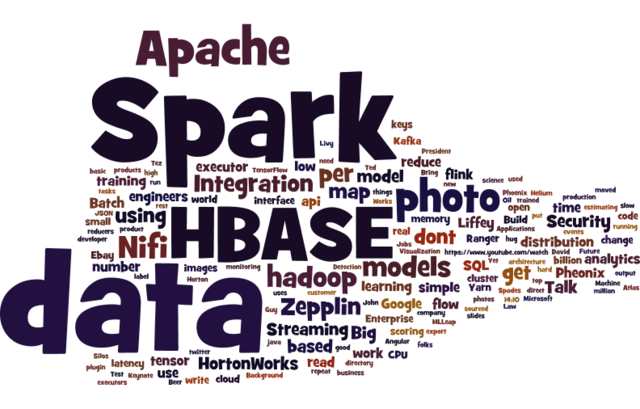

Apache Spark

Date: 16.09.2017

Sep 18, 2016: Apache Spark is an open-source cluster computing system that aims to make data analytics fast, both fast to run and fast to write. Spark is a fast and general cluster computing system for big data. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general execution graphs.

Apache Spark is an open-source cluster-computing framework. Originally developed at the University of California, Berkeley's AMPLab, the Spark codebase was later donated to the Apache Software Foundation. It is a fast, in-memory data processing engine with development APIs that let data workers run streaming, machine learning, or SQL workloads, and it ships with built-in modules for streaming, SQL, machine learning, and graph processing.

Hadoop and Apache Spark are both big-data frameworks, but they do not serve the same purposes: Hadoop provides distributed storage (HDFS) together with MapReduce batch processing, while Spark is a processing engine with no storage layer of its own that commonly reads data from HDFS or other sources.

Many learning resources are available. The Apache Spark FAQ addresses how Spark relates to Apache Hadoop. Beginner guides to Spark in Python (PySpark) answer common questions, such as how to install PySpark in a Jupyter Notebook, and cover best practices. Step-by-step instructions show how to build an Apache Spark machine learning application on HDInsight Spark clusters using a Jupyter notebook. Other tutorials and courses, such as Taming Big Data with Apache Spark, give a thorough, practical introduction to Spark as a fast, easy-to-use, and highly flexible engine for large-scale data processing, analysis, and machine learning (ML) with PySpark. This tutorial is a step-by-step guide to installing Apache Spark.
It covers installing Java 8 for the JVM and includes examples of Extract, Transform and Load (ETL) operations.

Talks on Spark include Intel's "Distributed Deep Learning at Scale on Apache Spark with BigDL" and a Databricks talk on easy, scalable, fault-tolerant stream processing.

Spark is a hot topic in big-data forums, where a recurring question is whether Apache Spark is going to replace Hadoop.

Fast, flexible, and developer-friendly, Apache Spark is a leading platform for large-scale SQL, batch processing, stream processing, and machine learning. It is an open-source parallel processing framework built around speed, ease of use, and sophisticated analytics, used primarily for data engineering and analytics. Introductory tutorials typically progress from an introduction through RDDs (Resilient Distributed Datasets), installation, core programming, and deployment to advanced Spark topics.

Spark is also available as a managed service in the cloud. Apache Spark for Azure HDInsight runs large-scale data analytics applications, and on AWS you can create and manage Apache Spark clusters and use Apache Spark on Amazon EMR for stream processing. Hands-on courses offer worked examples (one advertises 15) of analyzing large data sets with Apache Spark. Spark gives users new ways to process and make use of big data; it was originally developed at UC Berkeley in 2009.
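To make the RDD programming model described above more concrete, here is a minimal sketch of how a distributed word count flows through partitions, a per-partition map step, a hash-based shuffle, and a per-key reduce. It is a toy single-process model in plain Python, not Spark code: the helper names (`partition`, `map_partition`, `shuffle`, `reduce_bucket`, `word_count`) are hypothetical, and real Spark also combines values on the map side before shuffling, which this sketch omits. In PySpark the same pipeline is usually written with the RDD methods `flatMap`, `map`, and `reduceByKey`.

```python
from typing import Callable, Dict, List, Tuple

Pair = Tuple[str, int]


def partition(lines: List[str], num_partitions: int) -> List[List[str]]:
    """Spread input lines round-robin across partitions (hypothetical stand-in for distributing data to executors)."""
    parts: List[List[str]] = [[] for _ in range(num_partitions)]
    for i, line in enumerate(lines):
        parts[i % num_partitions].append(line)
    return parts


def map_partition(lines: List[str]) -> List[Pair]:
    """Narrow step: split lines into words and emit (word, 1); needs no data from other partitions."""
    return [(word, 1) for line in lines for word in line.split()]


def shuffle(mapped: List[List[Pair]], num_partitions: int) -> List[List[Pair]]:
    """Wide step: route every pair to a reducer partition chosen by hashing its key."""
    buckets: List[List[Pair]] = [[] for _ in range(num_partitions)]
    for part in mapped:
        for key, value in part:
            buckets[hash(key) % num_partitions].append((key, value))
    return buckets


def reduce_bucket(bucket: List[Pair], func: Callable[[int, int], int]) -> Dict[str, int]:
    """Combine all values for each key inside one reducer partition."""
    acc: Dict[str, int] = {}
    for key, value in bucket:
        acc[key] = func(acc[key], value) if key in acc else value
    return acc


def word_count(lines: List[str], num_partitions: int = 2) -> Dict[str, int]:
    mapped = [map_partition(p) for p in partition(lines, num_partitions)]
    result: Dict[str, int] = {}
    for bucket in shuffle(mapped, num_partitions):
        # Each key lands in exactly one bucket, so merging buckets never overwrites a count.
        result.update(reduce_bucket(bucket, lambda x, y: x + y))
    return result


print(sorted(word_count(["spark is fast", "spark is general"]).items()))
# [('fast', 1), ('general', 1), ('is', 2), ('spark', 2)]
```

The split between the map step and the shuffle mirrors why Spark distinguishes narrow transformations, which stay within a partition, from wide ones, which move data between partitions and are usually the expensive part of a job.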
Spark has been described as the open standard for flexible in-memory data processing, enabling batch, real-time, and advanced analytics on the Apache Hadoop platform. An HDInsight Spark quickstart explains how to create an Apache Spark cluster in HDInsight. SparkHub is the community site of Apache Spark, providing the latest on Spark packages, Spark releases, news, meetups, resources, and events in one place.
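One way to picture a single engine serving both batch and real-time analytics is the micro-batch model used by Spark Streaming: a live stream is cut into small batches, each batch is processed with ordinary batch logic, and results are folded into running state. The sketch below is a standard-library illustration under stated assumptions, not Spark's API: the function names `micro_batches` and `run_stream` are hypothetical, and batches are cut by event count for determinism, whereas Spark cuts them by a time interval.

```python
from collections import Counter
from itertools import islice
from typing import Dict, Iterable, Iterator, List


def micro_batches(events: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Cut a possibly unbounded event source into fixed-size micro-batches.

    Assumption: batches are cut by event count here; Spark cuts them by time interval.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    it = iter(events)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def run_stream(events: Iterable[str], batch_size: int) -> List[Dict[str, int]]:
    """Apply the same batch computation to each micro-batch and fold it into running state."""
    state: Counter = Counter()
    snapshots: List[Dict[str, int]] = []
    for batch in micro_batches(events, batch_size):
        state.update(Counter(batch))  # per-batch counts merged into cross-batch state
        snapshots.append(dict(state))  # what a downstream sink would see after this batch
    return snapshots


for snapshot in run_stream(["click", "view", "click", "click", "view"], batch_size=2):
    print(snapshot)
# {'click': 1, 'view': 1}
# {'click': 3, 'view': 1}
# {'click': 3, 'view': 2}
```

Because each micro-batch is just a small batch job, the same aggregation code works for a finished data set (one large batch) and for a live stream (many small ones), at the cost of latency of roughly one batch.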
